About Me

I am a research scientist and tech lead manager at Meta Reality Labs Research, leading a team of AI research scientists focused on advancing 3D computer vision, graphics, and imaging for immersive XR technologies. Before joining Meta in 2017, I earned my Ph.D. in Computer Science with a focus on computational imaging from the University of British Columbia.

Most recently, I have been working on generative video and 3D models for codec avatars. My past research focused on neural rendering for real-time graphics and on 3D computer vision, including view synthesis, immersive videos, telepresence, 3D/4D reconstruction, and generative content. My work has been published in top-tier venues, including SIGGRAPH, SIGGRAPH Asia, CVPR, ICCV, ECCV, and NeurIPS, and has been featured in the Meta Connect keynote, the Oculus Blog, demos to Meta's CTO, and various media outlets. I have also had the privilege of giving invited talks at NVIDIA GTC, the Stanford SCIEN talk series, and the CV4MR workshop at CVPR. Please see my publications below for details.

Beyond core algorithm research, as a tech lead I collaborated with a multidisciplinary team of software engineers, hardware engineers, and technical artists to design and develop real-time, end-to-end prototype demonstrations. Our published work includes gaze-contingent rendering for varifocal VR headsets, real-time perspective-correct MR passthrough, real-time supersampling for high-resolution VR, and ultra-wide field-of-view MR passthrough.

Before joining Meta, during my Ph.D. studies under the supervision of Prof. Wolfgang Heidrich at UBC, I worked on computational photography and imaging, including image restoration, superresolution, time-of-flight imaging, and non-line-of-sight imaging.

Selected Publications

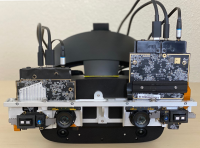

Wide Field-of-View Mixed Reality

MR passthrough headset prototype with an ultra-wide field of view — approaching the limits of human vision — designed to create a seamless, almost invisible headset experience

SIGGRAPH 2025 Emerging Technologies | Media Report | Media Report on a previous iteration of our prototype that Meta CTO shared publicly

DGS-LRM: Real-Time Deformable 3D Gaussian Reconstruction From Monocular Videos

Real-time transformer for dynamic scene reconstruction

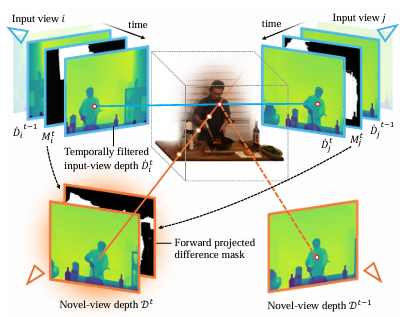

Geometry-guided Online 3D Video Synthesis with Multi-View Temporal Consistency

Online novel view synthesis of dynamic scenes from multi-view capture, a step towards real-time telepresence

LIRM: Large Inverse Rendering Model for Progressive Reconstruction of Shape, Materials and View-dependent Radiance Fields

Large model for high-quality inverse rendering

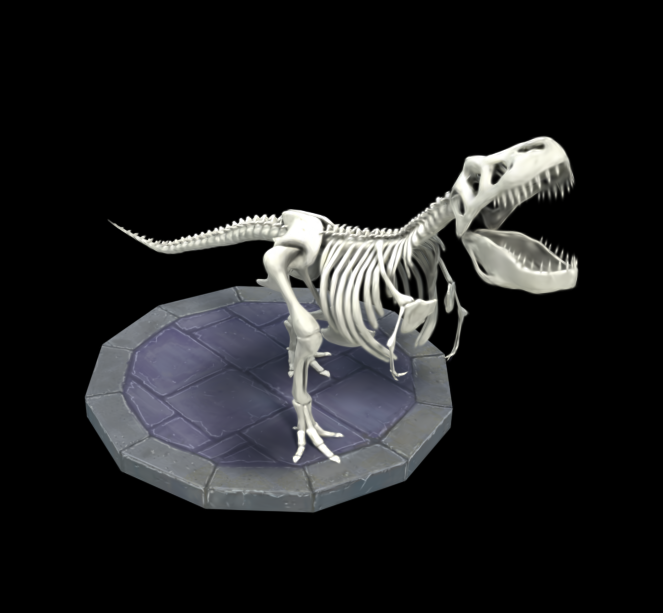

ReplaceAnything3D: Text-Guided 3D Scene Editing with Compositional Neural Radiance Fields

Text-guided, localized editing method that enables object replacement in a 3D scene

GauFRe: Gaussian Deformation Fields for Real-time Dynamic Novel View Synthesis

View synthesis of dynamic scenes from monocular videos using learned deformable 3D Gaussian Splatting

TextureDreamer: Image-guided Texture Synthesis through Geometry-aware Diffusion

Transfers photorealistic, high-fidelity, and geometry-aware textures from sparse-view images to arbitrary 3D meshes

AlteredAvatar: Stylizing Dynamic 3D Avatars with Fast Style Adaptation

Fast stylization of dynamic 3D avatars

Tiled Multiplane Images for Practical 3D Photography

Efficient 3D photography using tiled multiplane images

Temporally-Consistent Online Depth Estimation Using Point-Based Fusion

Online video depth estimation of dynamic scenes via a global point cloud and image-space fusion

NeuralPassthrough: Learned Real-Time View Synthesis for VR

The first learned approach to address the passthrough problem, achieving superior image quality while meeting strict VR requirements for real-time, perspective-correct stereoscopic view synthesis

SNeRF: Stylized Neural Implicit Representations for 3D Scenes

Transfer a neural radiance field to a user-defined style with cross-view consistency

Neural Compression for Hologram Images and Videos

Effective compression method for holograms

Deep 3D Mask Volume for View Synthesis of Dynamic Scenes

High-quality view synthesis of dynamic scenes for immersive videos

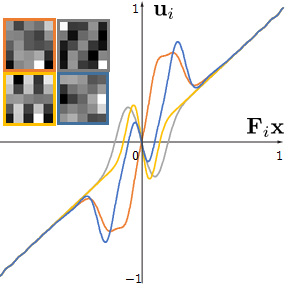

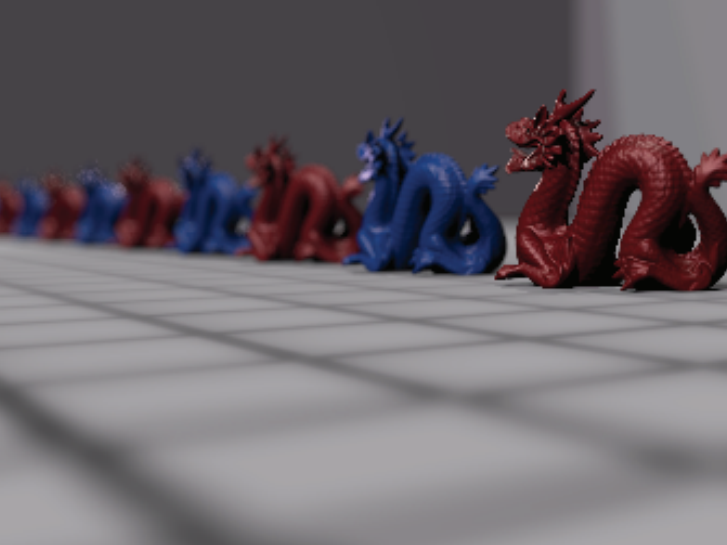

Neural Supersampling for Real-time Rendering

The first neural rendering technique for high-fidelity and temporally stable upsampling of rendered content in real-time applications, even in the highly challenging 16x upsampling scenario

DeepFocus: Learned Image Synthesis for Computational Displays

The first real-time neural rendering technique to synthesize physically-accurate defocus blur, focal stacks, multilayer decompositions, and light field imagery using only commonly available RGB-D images

SIGGRAPH Asia 2018 | Oculus Blog | Oculus Connect Keynote | Media Report

Discriminative Transfer Learning for General Image Restoration

Learning High-Order Filters for Efficient Blind Deconvolution of Document Photographs

Defocus Deblurring and Superresolution for Time-of-Flight Depth Cameras

Stochastic Blind Motion Deblurring

Imaging in Scattering Media Using Correlation Image Sensors and Sparse Convolutional Coding

Temporal Frequency Probing for 5D Transient Analysis of Global Light Transport

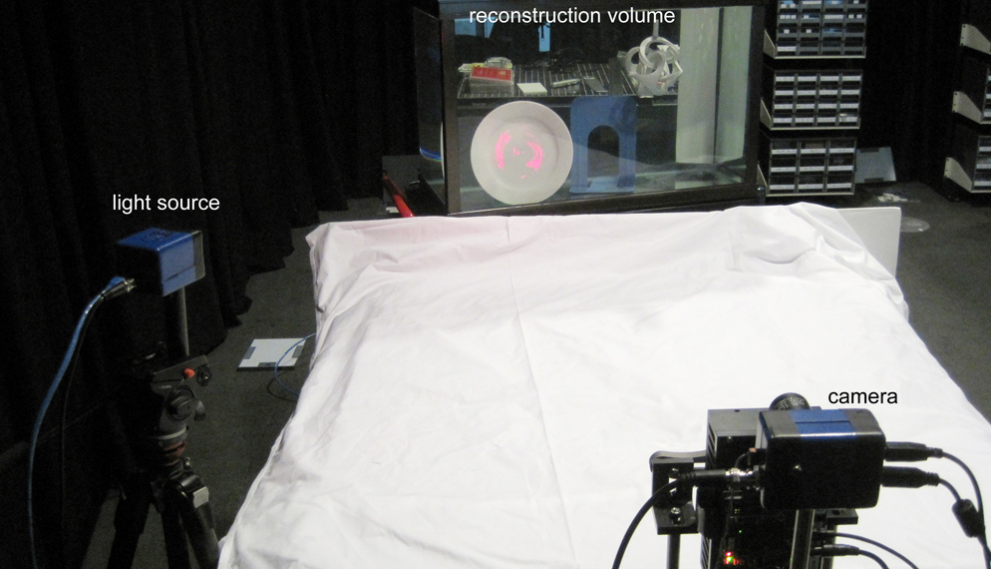

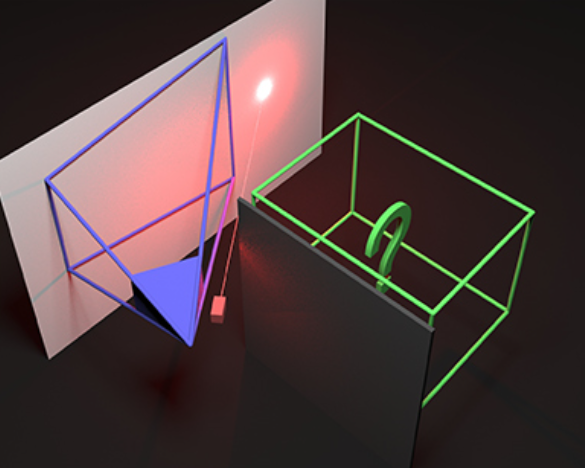

Diffuse Mirrors: 3D Reconstruction from Diffuse Indirect Illumination Using Inexpensive Time-of-Flight Sensors

Compressive Rendering of Multidimensional Scenes

Video Processing and Computational Video, LNCS 7082, Springer, 2011